Surround Sound Mixing

Introduction

Numerous opportunities exist today for up-and-coming audio engineers in the post- production industry, especially with the need to satisfy a cinema theatre audience, as well as consumers possessing a high-quality home theatre system. There has recently been an increase in new post-production studios, especially facilities that are aiming to capitalize on productions with medium priced budgets. With more facilities being launched, the need for multi-talented engineers is sure to escalate in the near future. In this article I will discuss in detail the comprehension, methodology and utilization of surround sound mixing as it primarily pertains to music. I trust this explanation will be beneficial for engineers desiring to enter the field of post-production surround sound mixing.

Historical Perspective

Surround sound mixing has surely evolved since Disneys release of Fantasia (1940); the first surround sound movie ever produced (Fantasound).

Not only has the viewing experience in large cinemas improved impressively over the last 20 years, consumers may now enjoy a fantastic entertaining experience with an affordable home theatre system that features a large viewing screen with a superb surround sound audio system.

The increase in demand for a home theater system is to a certain degree decreasing the desire for consumers to venture out to a theatre to watch the newest film releases.

Major film companies are now often spending between $100 million to $250 million to produce potential Blockbuster hit movies. However these big budget movies are not always translating into financial success. Theres been an implosion where three or four or maybe even a half-dozen mega-budget movies are going to go crashing into the ground, and thats going to change the paradigm, states Oscar winning director Steven Spielberg.

Recent movies like The Lone Ranger, which cost Disney over $300 million to produce and market, has taken in just $147 million worldwide, roughly half of which goes to theater owners. Alternatively there has been a nice surge in low priced films doubling and tripling their initial investment just in the theater release alone.

Film and Pay-per-View companies have started to simultaneously release shows for theatre, cable TV, laptops (smartphones) and DVD-Blue Ray, which has prompted major theatre chains and distributors to be concerned about what is sure to be a potential decline in revenues. I am sure that the initial price of a new DVD-Blue Ray release will continue to drop in price, along with the convenient option of downloading a movie which will encourage viewers to wait until a product/download release, rather than standing in the theater admission line of a movie premiere.

Recently music companies have started releasing back catalogues of famous recordings in surround sound for the car surround sound audio system. The ability to offer a dynamic enveloping aural experience for the automobile is becoming very affordable and desirable. The driver is in total control of setting the volume of the individual channels completely offering a maximum surround sound experience. Several studios in California are now specializing for this potential demand in the near future. I believe it will also be only a matter of time for radio stations to start broadcasting live concerts in surround sound for the car and home. CBC-TV and other cable/TV stations are already offering surround sound programming and more networks with more shows are sure to come online.

And now there is streaming!

Laptops, Ipads, and even smart phones are also beginning to take revenue away from the large theatre business. Several TV networks and cable providers are now selling TV series and movies as downloads for a demographic that are especially comfortable paying additional fees to access high quality content. Young people are now viewing more shows and music videos on their laptops and smartphones, with Youtube now the leading source for free video/music. Most listening is done with high quality headphones and listeners will continue to desire excellent sound, which has been verified by the astonishing sales of Beats headphone products and other listening devices from various top quality manufacturers.

Even though the audio is stereo on many of the shows, the stereo sound the listener is hearing is at times, an offshoot of the original surround sound mix. Most engineers will make certain the quality of the surround sound mix is the top notch and also make certain that the subsequent stereo mix is also high quality.

A recurring comment I hear from young people with laptops is that the quality of the story is a leading factor for their choice in viewing content. For them, if a show features a captivating story, the importance of the quality of video and audio playback will never supersede the importance of the storyline. The recent success of the TV series Breaking Bad clearly demonstrates and verifies this conclusion. A lot of these hit series are released on DVD/Blue-Ray or downloaded and often viewed on laptops with headphones. This might be construed as a concern for the engineer whose priority is creating excellent sound, but the conclusion to be taken is that the consumer is primarily concerned with the quality of the storyline. With this demographic feedback, film and TV producers are becoming further convinced that a great storyline does not require a huge budget to produce. Therefore more possibilities will be undertaken with the creation of more shows, which means extra work for the post-production engineer.

Premium cable channels like HBO, Showtime, Disney and AMC are now offering TV productions for a regular monthly service. This market is proving to be highly profitable and will continue to increase in the future.

With Internet-based content becoming more commonplace, the media company Netflix has introduced streaming, with the concept of viewing content without necessarily having access to a TV/cable network, where subscribers can choose from a database that is instantly accessible via the Internet. Netflix is allowing content that is not linked to cable TV providers and is simply using the Internet. They are growing rapidly, recently passing 38 million subscribers who pay the single-user monthly fee of $7.99. For the first time in TV history, Netflix, an Internet based company has received 14 Emmy Nominations (2013). With this type of recognition, Netflix is sure to be perceived as a serious financial threat by major networks and cable TV conglomerates. Then there is Apple TV, Amazon and many other ventures that are sure to arrive on the scene.

What is of interest here is that a good number of these shows are now mixed and broadcast in surround sound. What are exceptionally exciting are the sports networks live broadcasts, which literally attempt to seat you at the actual sports event. I viewed an NHL hockey game where the excitement of a crowd roar could be heard like I was actually sitting in the arena, where I could detect cheers and applause located all around me.

With low cost subscription rates and access to high bandwidth Internet, most industry executives believe that the market will grow for back catalogues to be visually enhanced and remixed in surround sound. If this business strategy proves profitable, which I believe it will, look for an additional motive for an increase in consumer demand for quality home theatre systems.

For up-and-coming audio engineers who would like to get into post-production, the timing could not be any better than now. While the revenues of the music industry are steadily declining, the opposite is happening in the visual media and video game industries. What now qualifies, as popular visual media are; movies, documentaries, TV series and sports entertainment. Major music recording studios are declining in numbers while many new audio post-production facilities are being built at a substantial rate.

The days of requiring millions of dollars for starting up a new audio post-production facility are unwarranted, where today the entrepreneurial engineers financial needs for starting a facility is within financial reason.

Al Omerod, winner of four Geminis, left Deluxe Film Studios in 2006 in Toronto to open his own production facility, Post City Sound, a venture, which satisfies most of his boutique clients, needs for a fraction of the costs of a major audio post-production facility. Post City Sound is only one of the many newer smaller post-production facilities that are proving profitable in the expanding market for medium budget productions. The larger facilities such as Technicolor and Deluxe Film House are simply too expensive for smaller yet numerous film/TV production companies.

With the economy looking positive in the next few years, I believe that home theatre systems will dominate the consumers home electronic purchases. If we take Rogers Cable TV as an example, consumers need only purchase a home theatre system, and be able to download movies, in order for them to have all the flexibility of a DVD, except for the ownership of the hard product of the content. Teenagers, who represent a large purchasing demographic for the entertainment industry will be lured to this new model of supplying exciting entertainment, not as a hard product but as a streaming service that can be purchased for a monthly fee from their local cable TV or an Internet based company like Netflix. If this becomes the model, then the demand for consistent, high- quality entertainment will increase even more once bandwidth is increased and the industry is suitably monetized. When this occurs, more medium-sized production facilities will employ more talented individuals who plan careers in the audio post- production industry. Executive producers will no longer be burdened by the expensive technical services and production costs for films/TV from high-priced facilities, when they can attain 90% of the production quality at a substantial reduction in costs from medium priced post-production facility. The reality of the 10% loss of quality by using a medium priced facility rather than an expensive facility cannot be detected by over 90% of the preferred clientele. The small number of viewers that can detect the 10% drop in quality can only do so in a large cinema setting. Research has also demonstrated that the slight drop in quality can be scarcely detected on a high quality home theater system.

The film/TV industry is also experiencing a developing trend where clients go to smaller independent music facilities for music mixing and end up having the facility complete all of their post-production including final mixing. These smaller facilities are accomplishing this work by utilizing affordable software such as Logic/Final Cut/AVID/Pro-Tools systems.

Will this be the end to quality filmmaking and TV production? I believe it will not! With technology like Final Cut Pro, AVID, HDTV creating excellent productions for Home Theatre playback systems, films will nevertheless look and sound exceptional in the comfort of ones own home. Lets face the undeniable fact that digital downloading is deeply affecting the purchasing habits of the average music listener. Never has so much music been accessible for an affordable price. In typical listening situations, the consumer cannot tell the difference in audio quality between an Apple-ITunes download and a wave file, nor are they concerned, as long as its a good song. It is the quality of the content that is more essential to them than the quality of the audio, which is also why the buying public is mainly interested in purchasing just singles. The preference now of the consumer is to pay $1 for a song instead of paying $15-$20 for an entire album that might have only two or three good songs.

Consider the DVD/Blue Ray. There has been an incredible resurrection of older movies, where nostalgic libraries are being upgraded with improved colour correction and enhanced audio. Do these films look and sound spectacular? Not really, for it does not really matter to the average consumer? Most people will always prefer a superior storyline and excellent music above technical quality. Box office receipts have declined in the last few years and I believe this is due to the lack of good content in films no matter how amazing the film looks and sounds in a large theatre. Of course there will still be blockbuster hits where the visual effects are stunning and the sound very dynamic, but these hit movies will be fewer and far between. By now some of the film companies that produce these blockbuster are losing potential income to consumers who desire to watch and listen to good content in the comfort of their own homes.

In reviewing the qualifications of good story content, test yourself and watch and listen to a movie produced over 30 years ago, such as The Godfather or Lawrence Of Arabia, compared to the recent release of Iron Man 3 or The Lone Ranger. The individual scenes of the classics lasted as long as 20-30 seconds, where the movies today, have on average an edit every 4-6 seconds. These movies featured actors who could truly perform, where cinematographers relied more on visual imagination, and the composers had to score music with full orchestras for longer scenes that needed to embrace the emotional interest of the audience. The acclaimed director Martin Scorcese recently listed his all time favourite 10 movies, and it was interesting to note that all the movies he chose were all made before 1968. Even pay TV shows are reverting back to an era where excellent writing and superb acting are being showcased. Recent Neilson ratings clearly demonstrate and confirm this new reality and executive approach with the recent remarkable success of shows like House Of Cards, Breaking Bad and Boardwalk.

With this new innovative business strategy, we should see further growth in the cinema film, internet and pay-per-view TV industry, where quality content will require consistent excellence along with the need for numerous choices in the various genres of productions. With the increase in Internet bandwidth, larger high-resolution picture and quality surround sound, the demand for first-class visuals and outstanding audio will be indispensible in engaging and retaining the consumers interest.

The consumer is becoming more accustomed to enjoying their entertainment in a comfortable home environment, be it through head phones with a laptop or viewing a large LCD screen with surround sound. Members of a family can now watch shows at various times and use the pause button for kitchen and bathroom breaks and not be forced to pay extravagant prices for confectionary items that they would normally pay at a theater. Theatre chains have tried to keep attendance elevated with improved quality seating, superior sound and alcohol services, but this costly upgrade of services is affecting their financial bottom line. Watching a movie in a large theater is very entertaining but also has its shortcomings; I personally loathe the sound of people continually chatting, eating chips, blocking my view, stepping on my toes and not getting the snacks I want. Some people say this is a trivial concern, but from my experience, watching and listening to Superman and Batman were just as pleasurable on a superb home theatre system than in the theater, where lining up for an hour in January is not my idea of relaxation and entertainment. The idea of spending $4,500 on a home theatre system with a 52-inch TV screen seems like a wise long-term investment to me.

The key argument here is; do home theatre systems that are becoming more inexpensive and enhanced for the average consumer, out weigh the advantages of going out to a movie theater anymore.

Is it better to wait a few weeks after the premier and watch the movie at home? Some may argue this point, but the fact is, the trend is starting to favour more home theater systems.

Video Game Industry

The most successful financial contributor of the entertainment industry is now the video game market, surpassing the movie and music industry combined. In 2011, the global video game market was valued at US$65 billion. The Video Game industrys is rapidly establishing itself as the single most exciting and vigorous creative industry around: a sector able to claim not only booming revenues and growing audiences, but a multitude of talents and new ideas that is increasingly attracting some of the foremost influential figures in film, television, music and the other arts. The release of the game Call Of Duty: Black Ops took in an astounding $650 million in its first five days alone. Recent studies show that 67% of all homes in the USA now have a video game system and that females make up a surprising 40% of the participants. Games like Nintendos Wii, Sonys PlayStation and Microsofts Xbox are now becoming standard household items in the home of almost every teenager. Sonys PlayStation 2 has sold over a record 165 million units alone. Most of the recent versions of these games can now play Blue Rays, HD-DVDs and offer 3D graphics.

Canada is presently one of the key leading game developers and publishers in the industry with major corporations such as Ubisoft in Montreal and Electronic Arts in Vancouver. With the Quebec government offering generous financial subsidies for the industry, Montreal has recently confirmed that over 2,000 people are now directly employed in the Video Game industry. Ubisoft a Global player whos largest development studio is in Montreal, has recently opened an additional office in Toronto where they are focused on developing Tom Clancys hit Splinter Cell 6.

With this movement and surge in the Video Game industry there will be an enormous and escalating need for audio designers and audio engineers. These engineers will have to be very knowledgeable and proficient in surround sound mixing.

Concert TV

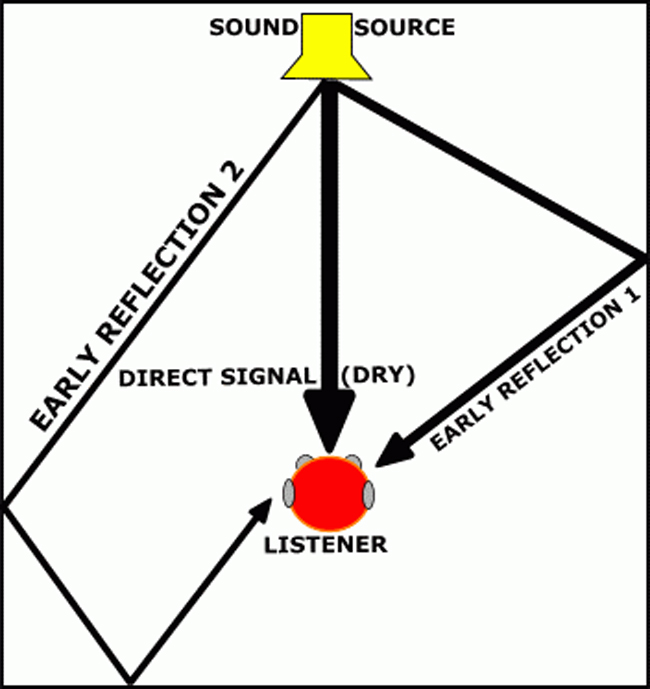

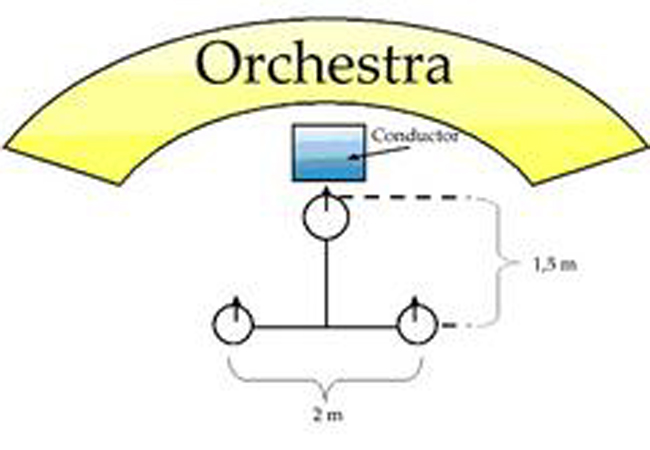

Concerttv.com is an innovative cable station totally dedicated to broadcasting music concerts that have been pre-recorded, edited and mixed. The original recording is recorded with close mics and ambient mics and remixed for surround sound listening. This specialty channel now offers all types of genres and already possesses a hefty back catalogue of shows ready to be remixed for surround sound. The newer shows are mixed with the vocals and musicians panned across the front channels with the rear channels dedicated for the ambience of the environment with associated crowd responses.

More cable TV providers are getting into the action and this format presents an additional opportunity for up and coming engineers desiring careers in music mixing for surround sound.

So what has this all got to do with mastering surround-sound mixing?

As previously stated, the demand for large costly production facilities needed to achieve a fantastic sounding mix has decreased in the last five years and is likely to lose market share to smaller production facilities. The smaller facilities are decently priced and posses similar professional standards. This allows the up-and-coming engineers more employment opportunities in the expanding growth of medium priced audio post- production facilities. These engineers are now better positioned to demonstrate their creative talents and potential and even launch their own business ventures.

I know of a talented young mixer at small post facility in Toronto, who recently is mixing three shows per week for TV and who has recently engineered with me on large surround sound film projects. All of this work was done on a Control 24, Pro Tools HD, Waves plug-ins, Final Cut Pro, 42 LCD monitor, with a Tannoy surround sound monitor system. Recent graduates of Audio Post Production schools have consistently demonstrated their abilities to combine numerous technical skills with intrinsic creativity to meet the future demands of the audio post-production industry. How are they achieving this? Through continuous monitoring of the future needs of the industry in order to stay well informed of the innovative trends that will fuel the increasing demands of consumer entertainment.

The wider latitudes and options in surround sound mixing are opportune incentives for the mixing engineer to be more creative and inspired in elevating audio to higher standards that will have greater appeal for the consumer. When approaching a 5.1 surround sound project, I personally try to envision the sound of the final product before I even begin mixing, an approach that has worked well for me in the past when mixing in the stereo format. I always ask myself how should I record audio knowing that I will have a surround sound template to fill? What signal processing and effects will I need to employ? What will be the focus in the mix, and how can I ensure the quality of the surround-sound mix for the cinema to be as enjoyable for the consumers home theatre system? In this article I am going to explore surround sound recording and mixing with; 1) analysis of conventional methodology, 2) endorse my own personal concepts and recommendations, which will solely be a subjective perspective based on how I deal with surround sound and it will at times differ radically from conventional opinions and standardized procedure.

How We Localize Sound (Duplex Theory)

If one is to maximize the effects of localizing audio in surround sound mixing, one must first investigate how humans perceive localization of a sound source. Listening to a sound source and verifying its location is determined by the position of the head relative to the sound source (the Direct Path). When the sound arrives to both ears, the time, frequency content, and amplitude will be different between the left and right ears.

It is important to confirm that a sounds frequency content lessens over distance, particularly the higher frequencies, due to atmospheric conditions.

A sound will reach the ipsilateral ear (the ear closest to the sound source) prior to reaching the contralateral ear (the ear farthest from the sound source). The difference between the onset of non-continuous (transient) sounds or phase of more continuous sounds at both ears is known as the interaural time delay (ITD).

Similarly, given that the head separates the ears, when the wavelengths of a sound are short relative to the size of the head, the head will act as an acoustic shadow, attenuating the sound pressure level of the waves reaching the contralateral ear. This difference in level between the waves reaching the ipsilateral and contralateral ears is known as the interaural level difference (ILD).

When the sound source lies on the median plane (center), the distance from the sound source to the left and right ear will be identical; thus the sound will reach both ears at the same time. In addition, the sound pressure level of the sound at each ear will also be identical. As a result, both the ITD and ILD differences will be zero. As the source moves to the right or left, ITD and ILD cues will increase until the source is directly to the right or left of the listener respectively, where the ITD and ILD will cease to be as influential in localizing a sound source. (e.g. ±90 degrees azimuth).

Similarly, when the sound source is centered directly behind the listener, both ITD and ILD will be zero, and as the sound moves to the right or left, ITD and ILD cues will increase until the sound source is directly facing the left or right of the listener, where the ITD and ILD will cease to be as influential in localizing a sound source. (e.g. ±90 degrees azimuth).

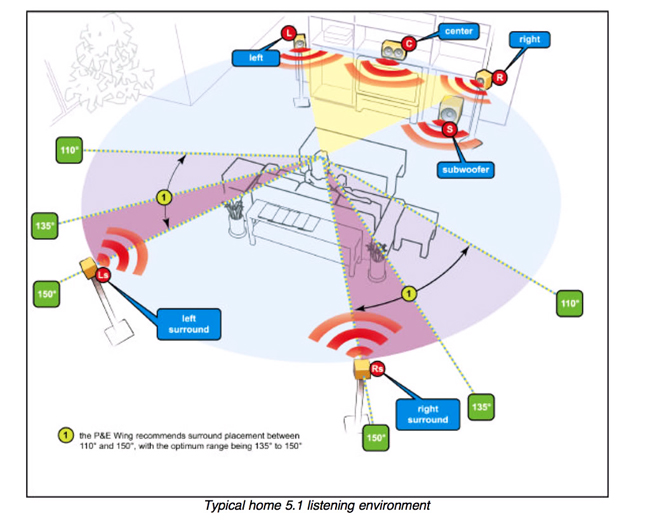

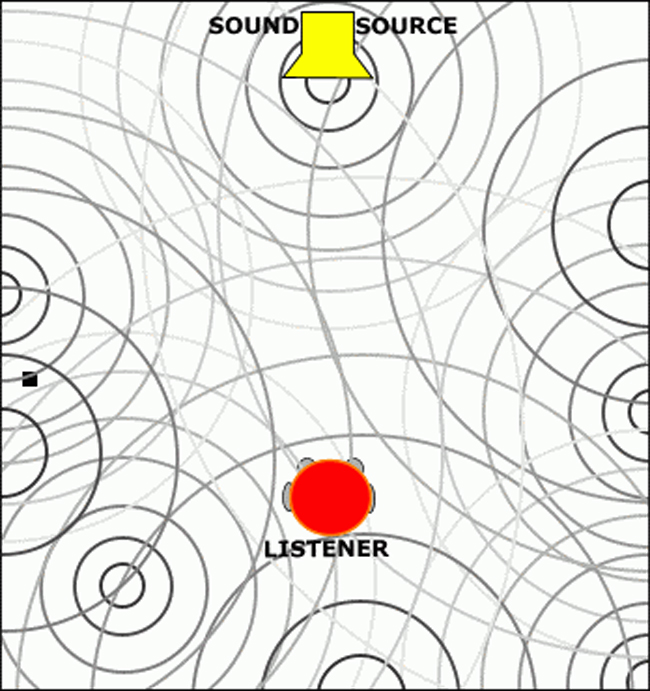

Figure 1: (Duplex Theory) Localization of a sound source. ITD and ILD

Separation of ITD (time) and ILD (level) Cues

Although this Duplex Theory incorporates both ITD and ILD cues in localization of sound, the cues do not necessarily operate together. ITDs are prevalent primarily for frequencies lower than 1500Hz, where the wavelength of the arriving sound is long relative to the diameter of the head and where the phase of the sound reaching the ears can be clearly determined for localization. For wavelengths smaller than the diameter of the head, the difference in distance is greater than one wavelength, leading to an unclear condition, where the difference does not contribute to localization of the sound source. In this situation, it is possible to have many frequencies above 1500 Hz arriving in phase to the ears (e.g., the frequency 1500Hz can also be in phase with 3kHz, 6kHz and 12kHz for both ears). This will cause inaccurate localization.

For low frequency sounds in which the ITD cues are prevalent and the waves are greater than the diameter of the head, the sound waves experience diffraction, whereby they are not blocked by the head, but rather bend around the head to reach the contralateral ear (omnidirectional) for localization purposes.

As a result, ILD cues for low frequency sounds will be as large as 5dB. However, for frequencies greater than 1500 Hz, where the wavelengths are smaller than the head, the wavelengths are too small to bend around the head and are therefore blocked by it (e.g., shadowed by the head). As a result, a decrease in the energy of the sound reaching the contralateral ear will create an ILD location cue. (See Fig. 1) To conclude, identification of a sound source is determined by the difference in time and phase relationship, as well as amplitude.

In Figure 1, we see that early part of the phase of the signal will arrive at the right ear before the left ear (ITD). The level of the signal will be louder in the right ear than the left, and the mid- high frequency content of the original signal will only arrive at the right ear.

Precedence Effect (Hass Effect)

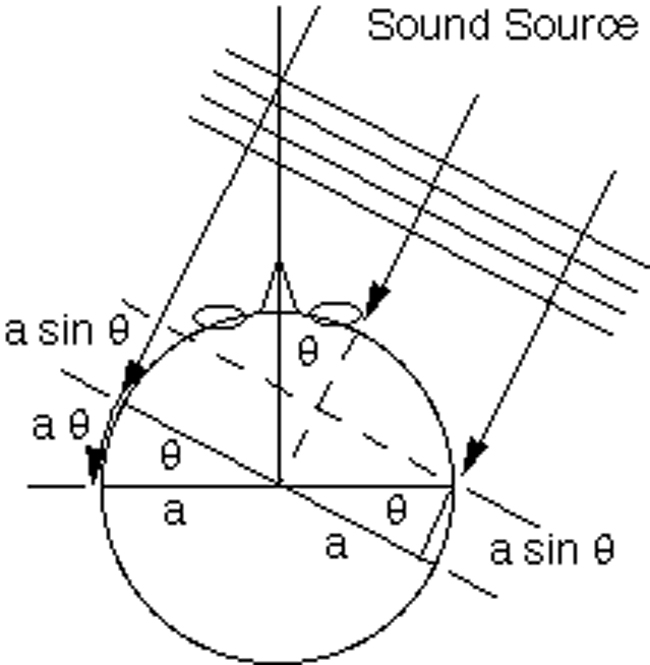

The auditory system of the ear can clearly localize a sound source in the presence of multiple reflections and reverberation. In fact, the auditory system combines both direct and reflected sounds in such a way that they are heard as a localized event and the localization of the direct sound has been determined by the precedence effect, also known as the Haas effect or the law of first waveforms. The precedence effect allows us to localize a sound source in the presence of reverberation, even when the amplitude of the reverberation is greater than the direct sound. Localization is based on the time difference between the left and right ear of the arriving sound event. (Figure 2) Of the various experiments that investigate the precedence effect, the most common exercise positions a to have a listener in front and between two loudspeakers placed in a triangular setting, in an anechoic or a very dead sounding environment.

One loudspeaker is used to deliver the direct sound while the other loudspeaker delivers a delayed replicate of the direct sound, thus simulating a slight delay. Such studies indicate the following: 1) If a direct sound event is generated simultaneously with identical amplitude in both the left and right loudspeakers, then a single sound source (virtual source) will be perceived by the listener at a location point centered exactly between the left and right loudspeakers. (Phantom center mono position) 2) When the direct sound and the delayed sound are of equal volume but the delayed sound one is increased from 0.1msec to 1 msec in the right loudspeaker, the perceived location of the sound source starts to move towards the left sound loudspeaker (direct) and this is known as summing localization.

3) When a delay between 1msec and approx. 15msec of the original sound is delivered form the right loudspeaker, the sound source is perceived as directly coming from the left loudspeaker even though the volume levels are identical between the left and right loudspeakers 4) When the delay in the right loudspeaker exceeds 15msec, the direct sound is precisely localized in the left loudspeaker; however and delayed sound (right loudspeaker) is also now localized as a distinct sound and is perceived as an early reflection of the direct sound creating a sense of distance and ambience.

5) If the sound source in the right loudspeaker is delayed between 1msec-15msec from the from the same sound source of the left loudspeaker and is louder in amplitude (+3db to +6db), the listener will perceive the sound as coming from the left loudspeaker even though the right loudspeaker is louder in amplitude. The slight difference in milliseconds (1msec-15msec) of an identical sound arriving from both loudspeakers overrides the difference in amplitude between both loudspeakers and will appear to be discretely localized in the non-delay speaker.

The experiments show how we are capable of correctly localizing a sound source in the presence of reverberation, provided the reflection arrives within 15msec of the direct sound. The possibilities for using the precedence effect in widening the image of the dedicated center speaker for surround sound mixing will be explored and experimented later in the article.

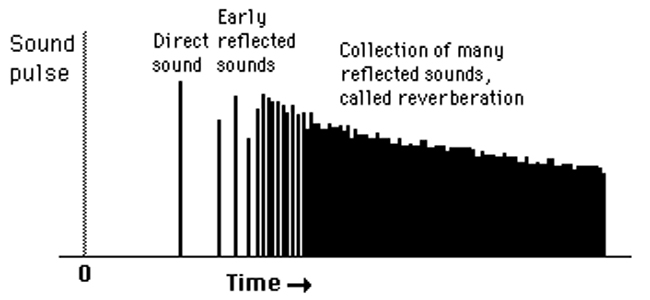

Figure 2: The Precedence Effect (Haas Effect)

Direct Path (Original) Sound

If you were to suspend two individuals ten meters above the ground and three meters apart from each other in an open field, you would be able to set up a situation where they could have a conversation with each other where the only audio heard is via the direct path route.

There would be no floor, ceiling, or walls to reflect the original signal, where each of the two individuals would describe the audio characteristics as being totally dry sounding without the ambience one would hear in an enclosed environment. When the distance between the two individuals increases, the amplitude and frequency response would decrease, due to the inverse law of sound and the absorptive atmospheric conditions.

When one individuals is talking directly on axis to one ear of an individual just listening, the high frequency content of the signal would sound very clear and subjectively described by the listener as emotionally intimate.

Later in the article, I will present a detailed explanation on creating a dimensional effect to achieve an emotional intimate effect between the lead vocalist and the listener.

In an enclosed environment the direct path sound is always the loudest portion of the overall audio experience, where early reflections and reverb are always lesser in amplitude. The only exception is if the direct path between the sound source and the listener would be obstructed.

In an enclosed environment like a performance center, the listener will always look on axis at the sound source; therefore the image of the performer is heard as being dead center no matter what the acoustics of environment are.

First and Early Reflections

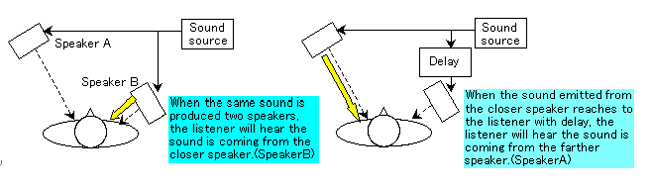

Sound radiating from surfaces in an enclosed reflective environment are known as early reflections (e.g., walls, floor, and ceiling). These reflections contribute to enhancing a sense of dimension in an enclosed reflective environment. Highly sophisticated mathematics and physics are used by studio designers, in their efforts to build excellent sounding recording studios, mixing rooms and live concert venues. (Figure 3) Early reflections typically arrive very soon after the arrival of the direct sound but are lesser in amplitude and frequency content. The length of time difference between the arrival of the direct sound and the arrival of the early reflections will connect to an amplitude decrease, with amplitude decreasing as the difference in time lengthens.

Effective sounding reflections that enhance a listening experience need to arrive to the ear within 15msec-80msec of the arrival time of the direct path and must sound lower in amplitude with less high frequency content. The length of time between the direct sound and the early reflections influence the amplitude and high frequency content of the reflections, with amplitude and high frequency content decreasing over time. Therefore the distance of the reflective surfaces from the listening position effect their amplitude and high frequency content. Reflections arriving to the listener between 15msec (left) and 30msec (right) will be louder and contain more high frequency content than reflections arriving between 60msec (left) and 80msec (right). Left and right early reflections arriving less than 15msec between each other could produce a flanging effect and/or image location difficulties of the original sound source. This effect can be easily produced if one claps their hands and listens for a flutter echo flange within the sound of the echo-delay, an effect caused by 2 or more reflections arriving less than 15msec apart from each other in an enclosed environment with parallel walls. Once the first and early reflections pass the 80msec mark (approx.), they begin to sound detached and discrete from the direct path signal and no longer contribute in influencing a sense of distance and dimension in the overall sound experience. If the sound source is transient sounding in nature, the early reflections are easier to hear and distinguish from each other but might prove to be distracting in a listening experience. Later we will look at how first and early reflections can perform a role in creating a sense of distance and dimension in surround sound mixing. Early reflections continue to multiply over time until there are so many of them they will eventually be perceived as reverb.

Early reflections result from the room surfaces (e.g. walls, floor and ceiling) and are known as the early reflections. They typically arrive very soon after the arrival of the direct sound but are lesser in amplitude and frequency content.

The length of time difference between the arrival of the direct sound and the arrival of the early reflections will connect to an amplitude decrease, with amplitude decreasing when the difference in time lengthens.

NB: The transient nature of the original signal will influence the 15msec to 80msec range for replicated transient reflections. A transient snare drum may begin to sound discrete in the 50msec-80msec range, where a smoother sounding instrument, such as a cello or flute, will not generate reflections that will begin to sound discrete from the original sound source until at least approx. 100msec. The amount of high frequency content at the front of the original sounds waveform and the tempo of the music are also influencing factors in determining a sense of dimension in an enclosed environment.

Figure 3: Direct Sound and Early Reflections

Reverberation

When sound is generated in an enclosed environment, multiple reflections become so abundant they will eventually merge into a highly diffused sound know as reverberation. This is most noticeable when the original sound source discontinues performing and the original sound continues to reflect from all surfaces, finally developing into reverb, which eventually decreases in amplitude until sound can no longer be heard. The time it takes for the sound pressure level of the reverberation to drop or decay by 60 decibels from the level of the original sound is known as the reverberation time, or RT-60.

As shown in Figure 4, in an illustrative listening environment, sound waves emitted by a source reach the listener both directly, via the on axis path between the source and the listener, and indirectly as reflections from walls, floor, ceiling, or any other reflective obstructions. This collection of reflected sound waves, which may total several hundred, eventually develop into reverb.

The collection of reflected sound reaching the listener varies as a function of the shape of the room, as well as the materials from which the room surfaces are constructed of (absorption coefficients) and the frequency content of the original source. Reverberation can also be used as a cue to source distance estimation and can present evidence on the physical make- up of a room (i.e., size; types of surface materials used on the walls, floor, and ceiling).

The number of times a wave is reflected before it reaches the listener is known as its order. The direct sound has an order of one; of sound arriving to the listening position. In a typical situation, the number of reflections will eventually generate into several hundred. A reflected wave is represented by its order of multiple reflections. In many situations, a higher reflection order indicates a reduction in the intensity level due to absorption by the reflecting surfaces and the inverse square law characteristics of the propagating waves.

Figure 4: Direct Sound, Early Reflections, and Reverberation

Reflections arriving later than 80msec, with orders greater than one, are known as late reflections or more commonly as discrete reflections. As the direct path sound decays, the initial sound of the reflections and reverb will sometimes be louder than the decay of the direct sound, thus appearing enmeshed or at times detached from the decay of the direct sound. Late reflections, arising from reflected reflections from one surface to another, are assumed to arrive equally and diffused from all directions with even amplitude to both ears and can be described as exponentially decaying sound or otherwise reverb. (RT-60) (Figure 5).

The reverberation term RT-60 can be defined as the time required for the sound pressure level (SPL) to decay by 60db after the initial burst of sound. The sound characteristics of the reverb depend on the shape of the enclosure, the material of the walls, floor and ceiling, and the number and type of objects in the enclosure. Depending on the level of the background noise, there may be a situation where reflections arriving after RT-60 are still audible. The decision to use the 60db figure was selected with the implication of a suitable first-rate sounding environment such as a concert hall. In such a situation, the loudest amplitude reached for most orchestral music is approx. 100db (SPL), while the level of background noise is approx. 40db. As a result, a reverberation time of 60db can be seen as the time required for the loudest sounds of an orchestra to be reduced to the level equaling the background noise.

Reverberation time is definitely influenced by the type of reflective surfaces encountered by the propagating waves. When a surface is highly reflective, very little energy is absorbed by the surfaces and the reflected sound will retain most of its energy, with this leading to long reverberation times.

In contrast, highly absorptive materials will absorb a great deal of the energy of a sound wave. When sound comes into contact with the absorptive materials, the energy is greatly reduced in the reflected portion of the sound, thereby reducing the overall reverberation time and amplitude. Late reflections will be become highly diffuses as the distance between the initial sound source and listener increases where the amplitude of the direct sound decreases until it is perceived as equal to the amplitude of the diffused reverb.

If an environment were filled with only with highly reflective surfaces and had no openings for the sound to escape, one could theoretically create an effect in which the sound would seem to last forever, for it is a fundamental law of energy we are concerned with. Therefore one must analyze what is occurring within the sound of reverb while it is decaying. If one analyzes the frequency response of the sound of reverb at the 1sec mark and then at the 3sec mark, one will conclude that over time, the high frequency content will decrease as the reverb amplitude decreases. The extent of loss of high frequency content and reverb time duration would be determined by the absorption coefficients of the reflective surfaces.

Reverberation contributes a gratifying quality to music with the idea of extending the duration of a melodic idea, which is very attractive to in numerous genres of music. Many digital software applications and even home theater system options employ tools that assist in enhancing the quality of music through the additional feature of offering more reverberation.

Today, engineers need a thorough understanding of all the elements that contribute to establishing a sense of distance and reverb dimension in order to create a surround sound mix that realistically emulates the sound experience one would perceive in an outstanding listening environment.

NB: The type of material used on each surface in an enclosed environment (absorption coefficients) dictates the frequency content and amplitude of the reflection/reverb. The softer and rougher the surfaces, the duller and shorter the reverb will be. If the surfaces are made of wood, the reverb will sound warm and not contain as many high frequencies. Concrete and glass produce a brighter reverb with a longer reverb time (RT-60). As the reverb time decays, the high frequency content in the reverb decreases. Across distance and time, the atmosphere absorbs high frequencies, and as the reflections bounce from surface to surface, the reverb diffusion increases. In other words, as the reverb decays so does its high frequency content and amplitude, no matter what type of surfaces are utilized to create a sense of dimension.

Figure 5: Amplitudes of Direct Sound, Early Reflections, and Reverberation

Auditory Distance Cues

The following auditory distance cues perform functions in the perception of the distance and dimension of an enclosed reflective environment, with both the listener and the sound source in stationary positions:

- Intensity of the energy emitted by the sound source (amplitude).

- Reverberation amplitude (direct path energy-to-reverberant energy

- Frequency content emitted by the sound source

- Binaural differences (e.g., ITD and ILD)

- The dynamics of the originating sound

Source intensity (amplitude) and reverberation are thought to be the most effective factors in determining distance between the originating sound source and the listeners position; however, any number of these auditory cues may be present where specific cues may dominate others depending on the type of listening environment. As a result, auditory distance perception may be influenced by such factors as the users familiarity with the rooms reflective surfaces, as well as the quality of the sound stimulus and the distance estimation calculated by the listener. In addition, changes in these cues may not necessarily be caused by an alteration of the distance between the listener and the source, but rather, may result from differences in the spectrum emitted by the source (e.g., the source audio is reduced) or modifications to the source spectrum due to differences in the environment, thereby further confusing matters, which can lead to poor judgments in source distance estimation. As source distance is increased, the intensity of the sound received by the listener decreases. However, intensity of the sound waves received by the listener may also decrease not exclusively with an increase in source distance, but also with a decline in source SPL intensity. In such an ambiguous situation, the user may not necessarily be able to discriminate between the two scenarios. Fortunately, as described below, the presence of other auditory cues may assist the listener in making a precise verdict.

It appears that auditory distance investigations should be conducted in standard reverberant environments. Source distance cues can be divided into two groups, exocentric, and egocentric. Exocentric or relative cues provide evidence with respect to the relative distance between two sounds, whereas egocentric ones provide information about the actual distance between the listener and the sound source. Consider a sound source and a listener in a room where the listener cannot see and does not have any prior evidence concerning source position or distance (Blind test). Now imagine the source distance is doubled. An Exocentric cue uses the decrease in sound intensity between the sound source at the initial location and the sound source at the new location to determine whether or not the distance has increased. On the other hand, with an Egocentric cue, the listener uses the ratio of direct-to-reverberant levels to determine the source is, say, three meters away from the listener. With a firm understanding of the relationship between the direct sound and its associated reflective properties an engineer will be able to determine and also create a listening position in relation to the direct sound source. Therefore the engineer may utilize both Exocentric and Egocentric principles in creating distance and a sense of dimension in the creative archetype of surround sound mixing.

The Waveform

Knowledge of the audio waveform elements e.g., amplitude-dynamics, time duration, and frequency content are imperative for optimizing ones ability to create dimension in surround sound mixing. There are four sections of the waveform to analyze and relate to how generate outstanding sound in a quality enclosed reflective environment.

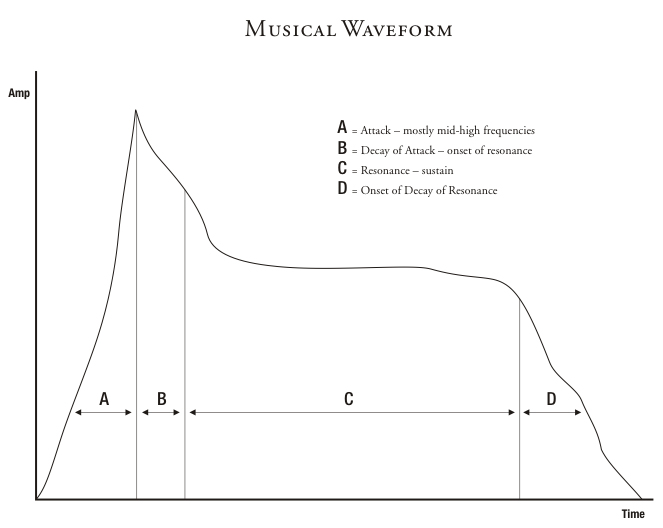

Figure 6: The Audio Waveform

The Attack A

In most audio waveforms, the attack (A-section) is composed of mid-high frequency content with little of the mid-low frequencies that are associated with fundamental music tonality. When analyzing the waveform of a note played on the piano, the first sound one would hear is the attack of the hammers hitting the strings producing overtones that are typically unrelated to each other. This attack would sound very percussive and almost noise like in nature when heard isolated. The frequency content of the A section would generally all be above 2khz.

Once the strings have been struck, they start to vibrate and produce resonance, better known as a musical note (B-section). The strings then excite the sympathetic soundboard, which produces a fuller and louder resonance for the struck musical note and its related overtone structure (C-section). The frequency content of the B amp; C sections would generally be all below 2khz. After the pianist stops playing, there will still be sound in a reflective sounding environment (reverb) (D). The frequency content of the D section would generally be all below 1.5khz.

With a drum, the attack occurs when the stick hits the drumhead. Like the piano, the attack portion of the sound is percussive with noise like characteristics, for two solid objects are coming into contact with each other create to create sound.

After the attack, the top and bottom drumheads will vibrate and generate resonance within the drum. If the drum is in a live sounding recording studio, the drum sound in the room will continue to resonate with early reflections and reverb.

In a lead vocal performance, words beginning with hard consonants like the word Time have no tonal content in the A section of its waveform. Almost all pitch generated by a vocalist is with the sound of vowels (ime). In the word Time the T consonant contains mostly noise characteristics, whereas the vowel I contains tonality, which is a sound defined as musical pitch. In editing dialogue the engineer can precisely take any word that begins with T and by its sonic character, use it in other words in the dialogue that begin with Ts. This is not true for vowels such as O or U for they contain tonality associated as pitch.

With music, the attack A section of the waveform defines the rhythmic element of a performance. If a pianist plays quarter notes at a fixed tempo, a situation could arise where the engineer could desire to alter the pianos waveform so its playing more of a rhythmic role than a harmonic/melodic role in a song. The engineer can achieve the desired effect by manipulating the attack section of the waveform of each note through signal processing such as equalization and compression. In the waveform of the piano, the attack section (A) has considerably more mid-high frequency content than the sustain section (C). Therefore if the engineer boosts frequencies above 2khz then only the attack section of the entire waveform will be enhanced. As the piano chord sustains and then decays, so does its mid-high frequency content and amplitude in relation to the attack section. When the piano is played forcefully with dynamics, the attack portion of the waveform increases in mid-high frequency content in relation to its amplitude. The harder the pianist hits the note, the further brighter the sound of the attack.

An additional technique to create an effective rhythmic role in a song is to use dynamic compression: compress the piano with a med-slow attack time and med-slow release time in order to elevate the amplitude of the attack (A-section) in relation to the amplitude of the sustain section(C). With the additional EQ boost in the mid-high frequency range, the listener will barely notice any tonal or amplitude change in the sustain and decay sections of the waveform (Camp;D). This signal processing procedure is to equalize the uncompressed part of the waveform of the piano in order to enhance the attack section of that is not compressed so that only the rhythmic elements of the piano performance waveform are enhanced.

If one wanted to emphasize the sustain element (C-section) of a piano chord, then dynamic processing of the signal would obviously be different and opposite to the above signal processing. In a production where there is a rhythmic picking guitar, the piano might supply the main harmonic content of the production. In this situation, the sustain part of the waveform (C-section) would have to be enhanced. The engineer will compress the piano with very fast attack and fast release times, as this will lower the amplitude of the attack section (A) in relation to the sustain section(C). And then equalize the sustain section(C) in the frequency range 200hz-2kHz for enhanced musical tonality.

The Decay of the Attack B (onset of resonance)

This part of the signal is a mix of the decay of the attack and the onset of resonance and pitch (B- section). With a piano as the attack part decays, the first sign of pitch begins to become audible. The change from the A to B sections occurs so quickly that it is not noticeable to normal human hearing.

The Resonance/Sustain C

This part of the signal is the sustain portion that contains the resonance and pitch of the sound (Music), and its where vibrato and tremolo occur. It is also here that compression is used to control overall volume management of the sustain portion of the sound in order to minimize random and extreme dynamics, allowing the sound-instrument to be heard more evenly in amplitude throughout the song.

The amount of high frequency content and overall amplitude in the sustain portion of the wavelength does not change as dramatically as the attack portion of the wavelength. The differences in attack amplitudes only subtly influences the frequency content and amplitude of the sustain sound section; where in most listening situations the difference is hardly noticeable.

With a snare drum this is the point in the drum waveform for sample enhancement. Try the following; take your favorite drum sample and remove the attack portion of the waveform (A-section) and trigger the sample with a key input from the original snare drum. This allows one to retain only the attack of the original drum and enhance the attack decay and duration of the sound with the additional sample.

The sample creates an image of louder amplitude through sound duration instead of the sounds peak amplitude, and in all cases, the amplitude of the sample is never as loud as the original snare drums attack. This allows the engineer to create a bigger snare drum sound without having to worry about peak distortion.

This also works with combinations of keyboard instruments. For one harmonic performance I have often linked through midi, a grand piano and a Fender Rhodes. I use the piano for attack (A) and the Rhodes for sustain (C). I then mix both instruments to a balance I desire, where at times I will feature the Rhodes in the verses and the grand piano in the choruses.

The Decay D

This part of the signal is the decay portion that occurs when the source instrument stops performing. Most of the audio content of this decay is the reflective sound-reverb generated in the enclosed environment. In a typical concert hall, the decay can be as long as 2.5sec, but it can be shorter than a 0.33sec in a small room environment.

To conclude, most instruments are capable of providing a combination of roles in music production; rhythmic, harmonic and/or melodic.

Designating which of the three roles an instrument will perform is critical in the stage of pre-production. With approved performances, the alteration of an audio waveform can be used to augment a harmonic, melodic and/or rhythmic idea, which can be further enhanced in the final mixing stage by the engineer.

Breakdown of an Audio Signal in an Enclosed Environment

An audio signal takes numerous different paths in an enclosed environment to reach a listeners ear.

- The direct path signal from the originating source to the listening position.

- The early reflections from walls, ceiling, and floor

- The many diffused reflections emanating from the direct sound and early reflections contribute to what is known as reverberation.

The unobstructed direct signal is always the loudest, and it is the most defined in its frequency response and easy to perceive variations in amplitude. The time it takes for the signal to travel from the source to the ear is determined by the speed of sound (approximately 1 meter/sec). If the direct audio is perceived as located dead center in a stereo or surround image, then the audios arrival time to both ears are identical. (ITD and ILD) If the direct sound is perceived to be coming from the left, the listener will confirm the location of the source; for the audio signal will arrive to the left ear slightly sooner and louder than the right ear (ITD and ILD). If a direct sound arrives from the rear right the listener will also be able to distinguish the correct location through the ITD and ILD process. It is also important to note that if an obstacle blocks the direct sound, the exact location of the sound source is difficult to determine and the listener will have to rely on the early reflections and the reverb to approximate the location.

As distance is added, the sound not only loses amplitude but also its high frequency content because of atmospheric conditions and loss of energy over time and distance. This allows the ear to recognize and conclude that the sound source is moving further away when heard out in the open and in an enclosure.

The first indication of dimension is when reflected sound arrives at a lower level and between 15msec and 100msec from the arrival time of the direct original sound. As previously stated, if the reflection arrives sooner than 15msec, it wont create dimension but will create imaging and exact localization problems. If it arrives later than 100msec, it will be perceived as detached and as a distinct sound experience.

The amplitude, time duration and frequency content of the early reflections and reverb determine the size of the enclosure and the type reflective materials of the enclosure.

In listening enclosures, the first early reflections, will typically arrive from the left and right walls. In most circumstances, the two delay times will be slightly different from each other, yet clearly distinct from the direct signal if they arrive at least 15msec later than the original direct sound. As previously stated, the reflections frequency content is always narrower (less high frequency) and lower in volume than the direct path signal, with the amplitude and high frequency reduction based on the absorption coefficient properties of the reflective surfaces of the enclosure and the distance traveled.

In a situation where the listener is situated at a fixed distance directly in front of a sound source, exactly between the left and right walls of a concert hall, the entire audio experience will contain an early left reflection and an early right reflection. Both reflections will create a sense of distance originating from the sound source and will also contribute to the development of reverb, which adds a sense of dimension. Both early reflections will lose high frequency content for the materials used in the walls of a concert hall are designed to absorb the higher frequencies of the early reflections in order to provide accurate imaging and develop a smooth and warm type of reverb in the hall. If the listener is sitting at distance from the stage but dead center, theoretically the left and right early reflections should arrive exactly at the same time. However due to the shape of the human head and the fact that the head is always slightly in motion, the arrival of the left and right early reflections are not exactly identical at any one given time. There is always a minor difference which results in random fluctuation of arrival times between the left and right reflections to the listening position.

In an example where the originating direct path sound arrives to the listeners ears at 5msec and two early reflections from the left and right walls arrive extremely close together within 25msec of the initial sound burst, there will be a difference of 20msec between the arrival of the direct path sound and the two early reflections.

With the addition of the 2 early reflections arriving at a later time than the direct sound, the listener will perceive that they are listening at a certain fixed distance, dead center from the sound source in a reflective enclosed environment.

If the listener moves a couple of meters from the center position to the left, the left reflection will be louder than the right reflection and the left reflection will arrive to the listener slightly sooner and louder than the right reflection. This will create a situation where the listener will perceive they are close to a reflective surface located to the left of the listening position. There will still be an early reflection arriving from the right wall but it will be slightly later and not as loud as the early reflection coming from the left, Therefore the early reflection coming from the right will only add a sense of dimension and distance to the overall listening experience. The direct sound will still sound like it is heard dead center when the listener is facing directly on axis to the originating direct sound. If the left and right early reflections were swapped with each other, the listening position would be reversed and the listener would conclude that he is situated close to a reflective surface located to his right.

The variables that will influence the distance between the listening position to the sound source and the type of materials the surfaces of the enclosed environment are constructed from are:

- The time differences; between the arrival of the direct sound and the two early reflections and the reverb

- The volume differences; between the arrival of the direct path sound and the two early reflections and the reverb

- The high frequency content differences; between the direct sound, the two early reflections and the reverb

- The reverb duration time of the enclosed environment (RT-60)

These variables can be controlled and manipulated by the engineer anywhere from the original mic placement setup and/or in the final mix where the engineer can alter:

- The timing between the different mic placements executed through moving of the original placement of the audio waveforms

- The different amplitudes of all the various mics

- The frequency content of all elements

- Additional use of artificial reverb.

It is important to understand how the ear determines distance from the sound source especially when the amplitude of the direct sound diminishes and the amplitude of the early reflections and reverb increases in relation to the decay of the original direct sound.

It is important to note that the amplitude and high frequency content of the early reflections and reverb, can never be greater than the amplitude and high frequency content of the direct signal as heard by the human ear in an enclosed environment.

When left and right delays are generated to substitute as early reflections are of identical value and both within 15msec-80msec of the arrival of a mono direct sound, listeners perceive the direct sound and both delays to be coming from one location and all audio will be heard as mono in a mix. When the direct sound source and the two early reflections are only heard in mono, it is very difficult to perceive a sense of distance and dimension that would be better established if the early reflections could be heard in stereo with the original sound source remaining in mono. If the instrument were stereo along with left and right delays (reflections) of identical value, there will be a perceivable yet limited sense of distance.

In a real life listening situation the right reflection and the left reflection would never be identical in time and amplitude for it would be impossible for the left and right reflections to arrive to both human ears at exactly at the same time, same amplitude and with identical frequency content. Thus, if one wants to create dimension in a stereo environment, liberties need to be taken when using digital delay settings to generate left and right early reflections, in order to create a sense of distance and dimension.

One method is to take a stereo-recorded instrument and add two delays (early reflections) of different values that follow the suggested guidelines. If the instrument were a stereo- recorded piano and left and right delays set to 30msec each, the delays would directly follow the original stereo panning of the piano. This method would create an unsatisfactory quasi-sense of distance and dimension.

One method of creating a sense of distance and dimension with a localized mono direct sound source is to place the original signal in the center position and create two delays at least 15msec and less than 100msec later than the arrival of the original sound source. Remember that a delay of less than 15msec from the related sound source will produce phasing and poor imaging effects and a delay of more than 100msec will create the illusion of a separate discrete delay and will not contribute in creating a sense of distance and dimension.

Pan the original signal to the center with the delays panned hard left and hard right at a lower level with some high frequency content rolled off. An Important factor when left and right delays are needed to emulate early reflections is that the delays cannot be of the same value and at least 15msec separately from each other to prevent phasing and image problems. If both of the delays are of the same time value and panned hard left and hard right they will collapse into mono and this will not effectively create dimension. Therefore in creating dimension; set the left delay at 30msec and the right delay at 45msec (or vice versa). Theoretically, the right delay (45msec) should be slightly lower in both volume and high frequency content, but for the purposes of creating dimension, it is unnecessary to apply this theoretical principle, because listeners most likely will not be hearing just one instrument in a mix or be in a listening situation to detect the exact location and frequency content of the delays (early reflections). The dimensional effect created by the 30msec (left) and 45msec (right) delays will greatly over-ride the time difference of the 15msec between the two delays.

If the left delay is 30msec and the right delay is 35msec there will be imaging and possible phasing problems between the left and right delays. If the both delays are quite different in set times (15msec-left and 80msec-right), it will create an unrealistic and undesirable listening environment, as if the listener is situated right next to one highly reflective surface.

To make the listening position appear even further back from two reflective walls, use a left delay of 75msec and a right delay of 60msec, with both delays at a lower level and with slightly less high frequency content than if the delays were set at shorter times. It is important to set the amplitude of the delays at a level where they will be only be perceived as supporting a sense of distance and dimension. If the delays are almost as loud as bright as the direct sound it will make the overall sound confusing and create the illusion that there might be rhythm discrepancies within a performance (flams). As previously mentioned, even though there is a difference of 15msec in the arrival times of the left and right delays, the dimensional effect will greatly override the 15msec time delay difference in relation to the fixed listening position, particularly if the direct sound is panned in the middle. If the sound source is stereo a sense of dimension will also be created. With two delays, and the associated altered frequency response and amplitude settings, a sense of depth and distance is added to the original direct sound to create the dimensional effect. I should also note that the type of volume and frequency of the beginning of the sound envelope of the original sound source, be it a percussive attack or a slow attack must be factored in establishing the time setting of the two delays for it will determine whether the early reflections (delay times) will sound dimensional or unfortunately discrete and messy. Furthermore, the frequency content of the delays will determine the absorption coefficients of the reflective surfaces.

These delays (early reflections) alert the psycho-aural response in a way that tells the listener that they perceive the sound at a set distance in an enclosed reflective environment. When the listener hears only the original sound, without reflections or reverb, the psycho-aural response would suggest that the audio being heard would have to occurring meters apart between the sound source and the listener elevated in the middle of a field at an elevated height. The only sound changes possible would be high frequency content and amplitude depending how far apart the source sound and the listener are from each other.

Reflected audio that sounds dull and dark suggests the listener is in an enclosed environment that has reflective surfaces which absorbs the high frequency content such as wooden walls. Reflected audio will sound brighter if the reflective surfaces are made of something firmer, like glass or concrete. Sound that bounces off surfaces always sounds less brighter than the original sound no matter what type of material the reflective surface is composed of, for every type of surface absorbs at least some high frequency content and amplitude. The duller the sound is of the reflection, the higher the absorption co-efficient of the reflective surface materials.

Discrete delays are easy to localize in the stereo image but will prove to be distracting unless the delays are used to enhance a rhythmic idea from a performance at a fixed tempo. So, if one pans a delay arriving at 200msec or later to the left side, it will be heard distinctly as if its directly and discretely arriving from the left.

This will not help create dimension, for the reflection will sound detached from the original sound source event. In order to add dimension to the sound of a percussive instrument, such as a snare drum, the delays (reflections) need to be in the vicinity of 15msec–50msec because of the transient nature of the sound of the drum (waveform). If the delays are longer than approx. 60msec, they may possibly sound totally discrete, because one would now hear the discrepancy between the transients of the original snare drum and the onset of the transient of the generated delay, which would result in a random and confusing sound. A good rule to remember for adding dimension to percussive elements is this: the faster the attack of the sound envelope, the shorter the delays (reflections) need to be to prevent the discrete delay from creating an overall puzzling and muddled sound. If one wanted to simulate a canyon like echo effect, then feel free to add discrete delays longer than 100msec, but make sure they are duller and at a lower volume than the original sound, as well as at a time setting that is not a rhythmic factor in the tempo of the music (in a rhythmic delay situation, the delay will likely land on a half, quarter, eighth, or sixteenth note of the tempo and will be masked by the rhythm of other instruments, which will make it hard to hear as a rhythmic delay effect linked to the original sound.

The faster the transient of the instrument, the shorter the delay needs to be used to generate early reflections to create dimension in a mix. If the instrument happens to be a piano, guitar or violin, delays can be between, left 15msec-80msec and right 15msec- 80msec, with a 15msec time difference between the left and right delays. For instruments with fast acting transients the delays should be between, left 15msec-50msec and right 15msec-50msec with a 15msec time difference between the left and right delays.

Another application to follow is: when adding longer delay times, dampen the high frequency content of the delay as the delay time increases. If the delays for one environment are 20msec and 35msec and you want to modify them to 50msec and 65msec than remove more high frequency content by lowering the high frequency roll off and mix the delays in at an even lower level. With plenty of instrumentation, the listener will barely notice the minor change of delay times and small change in EQ but will notice a variation in dimension. This technique also creates the illusion that the delays (reflections) have lost more high frequency content because the reflected sound has traveled further than a setting with shorter delays. Removing high frequency content from the delays will also simulate the type of surfaces the reflective walls are composed of.

Most concert halls and performance centers have a variety of reflective surfaces within their environments. In Toronto there are two performance centers used for classical, jazz, soft rock and pop music. Massey Hall is an older structure where the walls are mainly composed of wood and soft plaster. The acoustics of Massey Hall have been described as warm and rich sounding. The other performance center is Roy Thompson Hall, a newer building where the walls are composed of concrete and glass where the acoustics have been described as bright and at times confusing.

Therefore the delays frequency response used to generate early reflections will dictate the type of material of the reflective surface used in the listening environment. The delays times will dictate the size of the hall and how far the reflective surfaces are from the original sound source and the listening position.

Mix engineers have the ability to alter the frequency content and amplitude of the delays in a way that suits them to simulate a type of desired listening environment with a sense of distance and dimension, and the creative use delays works very well with all genres of music.

Stereo Dimension Conclusion

Before we move in to surround sound, a conclusion can be made for creating dimension for stereo mixing by emulating reflections through the use of digital delays. When the mix engineer assigns separate delays (early reflections) to vocal(s) or instruments recorded either in mono or stereo, he can then create a sense of distance and dimension for the original musical performance. The two delays to be generated need to be between 15msec and 100msec and at least 15msec separate form each other. The delay time stings, amplitude and assigned high frequency roll-off of the delays will imply the type of material the reflective surfaces are constructed of and how far the distance of the surfaces are between the listener and the source sound.

Surround Sound

The best sounding mixes in surround have the type of perspective where listeners can visualize distance and dimension in a surround sound listening environment. To achieve this, the mix engineer needs to understand how direct sound, reflected sound, and reverb work in combination with each another. In other words, how can the engineer relate and use this knowledge to achieve a desired dimensional perspective in a surround sound mix? Sound design, ambience, Foley and dialogue are mostly mono or stereo elements then altered and adapted to create a surround sound image. As previously stated, once an engineer comprehends how sound works in a three-dimensional environment he will also have the ability to take mono and stereo elements and generate a surround sound mix.

When sound is projected from a source location, a listener will first hear the direct sound, then early reflections and reverb. Once reflections regenerate where they become so dense that listeners can no longer distinguish them as discrete reflections, these numerous reflections evolve into highly diffused reverb. To create dimension effectively in this situation, one needs to analyze the music in a three-dimensional perspective rather than a two–dimensional one. A listening environment in surround sound will function considerably more advantageously in creating distance and dimension than in a stereo one. Through the resourceful use of signal processing in a mix (amplitude, frequency response, and time duration), the engineer may acquire the rudimentary and essential knowledge that allows for creating distance and dimension in surround sound mixing. However, fundamental laws of physics govern the process of creating a realistic sense of dimension. Therefore to create dimensional aural landscapes, an engineer also needs a fundamental understanding not only of how human hearing relates to sound but also how to manipulate the various functions required in creating dimension for a surround sound mix.

It some cases the techniques required to create dimension in surround sound are very unconventional and remarkably original. As they say, If you want to break the rules, you need to know the rules you are breaking. An outstanding example of being original is in the imaginative utilization of convolution reverb, which can be manipulated to achieve astonishing believable realism.

With a good understanding of the physics of enclosed environments, as well as the fundamental operational principles of audio processing, it is possible to create the illusion of virtually almost any listening environment that can be imagined. First, one needs to know how sound arrives with all its fundamental characteristics from a fixed location to a stationary listening position in an enclosed environment, and then recreate this model with all the dimensional characteristics for a surround sound mix. Figure 10 shows the layout of a concert hall with three different listening positions, A-B-C, all situated at fixed distances from the performance stage. The goal here is to determine which factors contribute to the overall sound experience with the listener seated at the three different stationary distances from the sound source. If a group of musicians are performing on a stage in a performance center, the listener will hear three different aural experiences when seated at the three different distances from the stage. The quality of the direct sound, early reflections, reverb, high frequency content and amplitude will all be considerably different between one fixed listening position and another position. Once an engineer understands why the three listening experiences are different, he will then be acquainted with the knowledge of the fundamental factors and how they differ and relate to each between one listening location and another. The engineer will then be able to manipulate specific audio processing to create dimension, so instead of the engineer (listener) having to change his location of listening positions to hear different dimensional perspectives, he can remain in one fixed position and create distance between the different instruments and vocalist to achieve a sense of dimension between the individual instruments for a surround sound mix. If the engineer can determine which rules of sound that are involved and how they contribute and determine the type of listening experience for each listening position, the engineer will then be able to reverse the situation and mix a performance where the musicians are placed at different distances in the mix from his fixed mixing position (listeners position). So instead of the listener having to move to different positions to hear different characteristics of the overall listening experience, the listener (engineer) can then take this knowledge and situate the listener in one position and have the musicians placed at various distances in the mix. For one example, the engineer can build a mix where the lead guitar sounds like the listener is sitting very close to the stage in the A position, the drums have the listener situated further back in the B position and the synthesizer in the C position therefore creating different distances and perspectives for various instruments in a surround sound mix.

I recently mixed music for the world famous Cellist Yo Yo Ma for a film that presented perspective problems that needed to be corrected from the original mix. The original session was recorded in a concert hall with various mics in various locations. The engineer who recorded the original session followed standard conventional recording techniques that are used throughout the classical music genre.

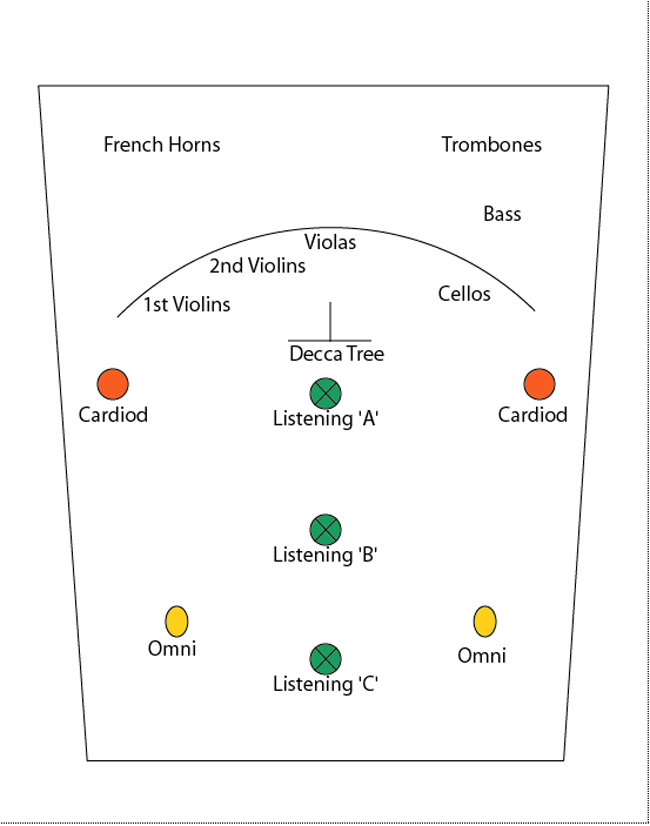

The recording engineer used a close microphone on the cello, a Decca tree, flank mics and ambient hall mics.

Once the engineer obtained all his recording levels he then assembled a mix of all the mics and stuck with one fader setting for the entire recording. The engineer concluded that the best sounding mix consisted mainly the ambient, flank and Decca tree mics, as the best desired balance for the listeners taste (no use of the solo cello spot mic). After auditioning parts of the recording, I convinced Yo Yo Ma that using one fixed fader (location) position that focus too much on the ambient mics for the entire recording was not effective in translating the emotional and intimate feeling of the performance and fell far short of achieving the finest quality of a sound experience for the listener. However I did approve of some of the recording engineers balances of mics for some sections of the recording. Still the problems with the one-fader position for the mix were these:

- When Yo Yo Ma was performing parts of the music score that featured him as a solo, the overall sound on the cello was too distant sounding and lacked presence and intimacy. The sound was also too reverberant and low in amplitude.